Concurrent Programming: A Taxonomy

How do you picture concurrent programs in your mind? Do you think of a graph with threads as branches, forking and joining? Do you conceive of them as assembly lines, with data being molded into different shapes at each station? Or do you think of threads as actors, like the members of a sports team, passing data around like a football?

There’s a rich design space for modelling concurrency. Depending on the specifics of your problem, some mental models will fit more naturally than others. For each model, in turn, specialized frameworks and libraries are at your disposal. Let’s take a look at what your options are.

Some history

When the US Defense Department and American elite universities were taking their first baby steps into the world of computing, computers could do one thing at a time.

In the 1940s, Harvard University developed the Mark I. This 15 meter long, 50-ton beast was able to perform a whopping three additions per second. If you wanted to apply its computational power to your computation, you had to sign up in a calendar. During the reserved time slot, the Mark I was all yours to feed with punch cards of your choosing.

In the 60s, the IBM 7090 came around; no signing up was necessary anymore. If you needed a job done, you handed your punch cards over to the operations team that ran their 7090 from a dedicated, air-conditioned room; then you went off to get a coffee. The operations team collected work for several hours, then transferred them all onto a magnetic tape, and had the 7090 process them sequentially, loading each jobs program and data in turn — like a typical batch-processing system would. The results were written to an output tape and printed. Users could pick up their printouts a couple of hours later. Debugging must have been a nightmare, though. Imagine forgetting a semicolon and only finding out hours later when getting your printouts.

The Amiga 1000, introduced in 1985, marked a leap in computing, being the first personal computer with preemptive multitasking capabilities. Unlike earlier systems that processed tasks one after another or required users to manage the switching, AmigaOS could juggle multiple programs seamlessly, ensuring each got its fair share of CPU time without any user intervention. This meant you could compose music, edit graphics, and write letters all at once — assuming you didn’t mind juggling floppy disks. Multiprogramming was here to stay; but initially, the abstractions that evolved around it — think processes and virtual memory — didn’t spill into applications.

In the early 2000s however, chip designers, hard at work to meet the expectation of an exponential increase in computing power promised by Moore’s Law, tripped up. Moore’s Law, first articulated by Gordon Moore in 1965, predicted that the number of transistors on a chip would double approximately every two years, leading to a steady rise in performance. For decades, this held true as processors became faster largely by increasing their clock speeds, allowing them to execute more instructions per second.

But by the early 2000s, this approach hit a wall. Higher clock speeds led to problems with heat dissipation — processors consumed so much energy and generated so much heat that cooling systems struggled to keep up.

Propagation delays also became a limiting factor. As clock speeds increased, the amount of time it took for signals to propagate across the chip did not decrease. This propagation delay meant that signals could not reach all parts of the chip within a single clock cycle, effectively limiting how much faster chips could be clocked.

To continue delivering performance improvements, chip designers shifted focus from single-core processors to multi-core CPUs, integrating multiple processing units on a single chip. While this approach unlocked new performance gains, it required a paradigm shift: software had to actively take advantage of these cores. This is when multiprogramming awareness trickled down into applications. Developers had to adopt concurrent programming models, with abstractions like threads and parallel algorithms becoming essential to harness the power of multi-core architectures.

There’s the old joke we used to tell each other in high school — if only you lived in ancient Rome, there would have been less history to study. In the same way, if you had been a programmer in the 90s, you arguably wouldn’t have had to look into concurrency. But in today’s world of multicore processors and distributed systems, concurrency is no longer a dark art practiced only by system programmers and geeks. It is, for better or worse, everywhere. Concurrency is hard to grapple with, which has led people to develop a plethora of frameworks and language features to model concurrent programs.

The taxonomy

Processes

Let’s start at the beginning: A process is an independent program in execution, complete with its own memory space, program counter, and system resources. Processes are isolated from one another, meaning one process cannot directly access another’s memory or resources, making them robust but relatively heavy to create and manage. Communication between processes, when needed, typically relies on mechanisms like inter-process communication (IPC).

You can build perfectly decent concurrent programs with old-school processes, and have them communicate via IPC. This style of concurrency is often called shared-nothing concurrency, because the processes are loosely coupled, coordinating via messages.

Case in point: Postgres, the popular relational database system. Postgres decomposes into multiple subprocesses, each handling a specific subproblem independently.

The postmaster root process starts a series of specialized background processes: the logger; the vacuum launcher, which cleans up the heap; the checkpointer, which periodically writes the current state of the database to disk; and a dedicated backend process for each client connection.

Sometimes, however, different workflows need to coordinate closely. Then, shared-nothing fails us. The Apache HTTP Server originally adopted a process-per-request model, that spawned a new process per incoming HTTP request. As web traffic grew in volume, this model became impractical, due to its high memory- and context switching overhead.

Threads

Threads are also known as “lightweight processes.” They are smaller units of execution that exist within the same process, sharing its memory space and many of its resources. The motivation for using threads often is grounded in the desire to avoid interactions with the operating system which necessitate context switches to the kernel. Kernel switches are an operation where the CPU saves the state of the current process and loads the state of the kernel. This entails cache invalidation and disk I/O, overhead we would rather avoid.

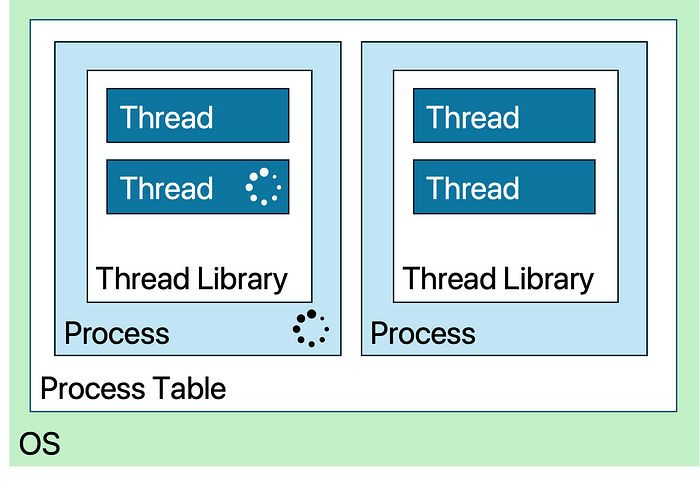

Library Threads

One way of avoiding the operating system is to implement threads as a library and have them become part of the program that uses them. After all, threading is not much more than the Inversion of Control pattern in action. Essentially, your threads are just callbacks registered with the library, to which the library dispatches control according to a scheduling policy. This doesn’t require any “OS magic” and works entirely within the process. However, there are limitations.

Unlike the OS, which uses periodic interrupts to regain control from running threads, a userspace threading library cannot forcefully interrupt threads. To switch between threads, a thread must voluntarily yield control by calling some function, often called yield(), provided by the library. This introduces the risk of forgotten or poorly timed yields, which can lead to performance bottlenecks or even deadlocks. Not ideal, but something disciplined programmers can handle.

There’s another problem: If a userspace thread makes a blocking system call (e.g. to read from a file or wait on a network socket), the entire process, including all its threads, are blocked. The OS has no knowledge of the individual userspace threads and therefore cannot schedule any of the threads if just one of them blocks. This can be mitigated by using non-blocking system calls, like poll() instead of read(), though this adds complexity to the program.

In the same vein, the operating system’s unawareness of user-level threads makes it impossible to schedule individual threads onto different cores. User-level threads are still useful as a way of factoring your program, making it more readable and maintainable, but it makes true parallelism impossible. In a multicore world, this is an unacceptable handicap. We’ll have to look for alternatives.

Kernel-Space Threads

An alternative is to implement threading directly in the kernel. In this model, the OS is aware of individual threads, and threads, rather than processes, become the unit of scheduling. Processes still exist as resource containers (for memory, file handles, etc.), but the kernel manages threads directly.

This approach resolves the issues inherent to userspace threading. Preemption is handled by the kernel, which can forcibly switch between threads based on a timer or other interrupts. Blocking calls only block the thread that issued the call, not the entire process. However, the overhead that userspace threads sought to avoid is back — every context switch between threads involves a kernel transition, which is more expensive than userspace thread switching.

Hybrid Models

Modern systems often adopt a hybrid approach. Here, kernel threads are used as the foundational scheduling units, but a userspace threading library is layered on top to manage user-level threads. The library maps multiple user-level threads onto fewer kernel-level threads, combining the benefits of both models. Kernel-level threads can be thought of as representing CPU cores, while user-level threads represent work that can be moved onto and off cores.

Synchronization

While sharing a processes resources makes threads faster to create and switch between, it also introduces complexity: now that multiple flows of execution roam the same memory space, coordination is indispensable to prevent unwanted interactions.

One way for threads to interact weirdly is to edit the same object at a time, in incompatible ways. We can preclude these race conditions and make our code thread-safe by protecting sensitive regions of the code with locks. Locks are one of the most common synchronization mechanisms used to create critical sections, sections of code into which at most one thread is allowed at a time. The downside of locks is the possibility of deadlocks, where two or more threads are stuck waiting for each other to release their respective locks. It is also difficult to prevent contention, where some single lock is the popular kid on the block and wants to be acquired by everybody. A queue forms, potentially degrading performance.

Lock-free code offers an interesting alternative to traditional locks. In a lock-free algorithm, threads never block, which eliminates the risk of deadlocks. Lock-free algorithms often rely on Compare-And-Swap (CAS), a hardware-supported atomic operation that allows updating a variable without ever forcing a thread to wait. It is used by many lock-free data structures, such as atomic counters and concurrent queues, and is attractive in highly concurrent environments where lock contention would degrade performance. However, a downside to CAS is the potential for livelock, where threads continuously retry and fail, leading to wasted CPU cycles.

Visibility is also an issue when multiple cores cooperate. Each core caches data in its private memory, where it is invisible to other cores unless flushed into shared memory. Memory barriers are used to ensure a consistent view of memory across threads. Java’s synchronized acts both as lock and as memory barrier: It protects a critical region of code, and for whoever enters, it guarantees that the most up-to-date version of the data is visible.

Task parallelism and Object-orientation

The object-oriented way of making your code thread-safe is to think about upholding contracts and invariants. In a single-threaded environment, it is an object’s job to keep its internal state consistent; why would it be any different in a multi-threaded environment? If concurrent access to your classes can break an invariant, you need to adapt your implementation accordingly by building some kind of synchronization into the class.

When your code is threadsafe, you can scale vertically: Multiple threads can run the same code without worrying about interference.

Pipeline parallelism and managed concurrency

An alternative way of doing concurrency is to divorce the work that is to be done from the entity that will perform it. In such a setting, developers write concurrency-agnostic code. They split their program into units of work that are sensible from a business perspective and feed them to a framework that is concerned just with managing concurrent execution.

Eviction of concurrency management from business code necessarily implies that business code does not need to be thread safe — how convenient! Recall that, when we used task parallelism, multiple threads were roaming through the same address space, which requires us to build synchronization into our classes. Managed concurrency frameworks, in contrast, are designed around the idea of ownership. A unit of work is owned by one thread at a time, and only the owning thread may touch the unit — we say the unit is confined to its owner. Ownership can pass from one thread to another only at well-defined moments, at which the framework takes care of synchronization.

This mindset gives rise to constructs like Java’s ExcecutorService, an abstraction that encapsulates some policy for running stuff. Typically, though not necessarily, an ExecutorServiceinternally manages a thread pool containing a number of workers. When running work, it can impose timeouts to prevent long-running tasks from hogging resources. Load-shedding, retrying or custom prioritization are possible as well. Or associating limited resources like database connections with the individual threads, giving the guarantee that, as soon as a workload is scheduled, it has exclusive rights to the threads connection.

Kotlin’s Dispatchers essentially are long-lived, predefined ExecutorServices, but with a unique twist compared to Java’s thread pools. Instead of submitting tasks by calling a submit function, coroutines are bound to Dispatchers essentially by annotating them with a dispatcher’s name. Dispatchers.IO is for running I/O-bound tasks, Dispatchers.Defaulthandles CPU-intensive ones. This makes control flow appear more linear and improves on Java’s verbosity. Coroutines also make clever use of Kotlin’s language features to implement structured concurrency — this approach ties the lifecycle of a concurrent task to the lifecycle of its parent and helps prevent resource leaks.

Reactive programming makes clear why horizontal parallelism is often called pipeline parallelism. Your code becomes undeniably pipeline-shaped: The program is broken down into units of often no more than a line in size. Each unit receives data from the unit preceding it in the pipeline, and passes it on to the next after processing. These pipelines are inherently non-blocking, with each stage running on a thread chosen by the framework (though that choice can be mediated by indicating a Scheduler).

In reality of course, no problem or program is so pure as to require the use of just a single of the approaches described above. You’re meant to mix and match, depending on the particular problem you’re facing. If you’re still unsure about which paradigm best suits the particular problem you’re facing, or if you need help with applying the paradigm you’ve decided on, me and my colleagues at & are happy to help! We have plenty of experience with designing and building concurrent solutions. Just shoot me a mail at florian.wicher@andamp.io

Until then, happy programming 🙂