Why Breaking Up Your Monolith Can Kill Your Project

In today’s fast-paced tech world, the buzz around monoliths and microservices continues to grow. The allure of “Extreme Agile Cloud Native Digital Microservices” seems irresistible, and many companies are eager to abandon their monolithic legacy systems. The prevailing notion is that monoliths are no longer sustainable: they are burdened with maintenance challenges, gradually fall into disrepair, and are perceived as outdated relics of the past.

Perhaps you’ve come across advertisements or heard colleagues talk about the necessity of ditching monoliths and embracing the wonders of microservices. It’s not uncommon to encounter claims like, “Microservices are the future!” or “Everyone is doing microservices these days”. Such statements often stem from a desire to stay competitive and align with the “latest” industry trends.

However, before diving headfirst into the world of microservices and abandoning your monolith, it’s crucial to approach the decision with careful consideration. Migrating from a monolith to microservices is a complex and challenging endeavor, fraught with potential pitfalls. While microservices can offer numerous advantages, they are not a one-size-fits-all solution.

One of the most critical aspects to ponder is the possible introduction of errors during the migration process. Breaking up a monolith into microservices demands meticulous planning and a deep understanding of the existing system’s intricacies. Failing to account for all dependencies and interactions can result in unforeseen issues that might cripple your project.

So, should you proceed with the migration, or is it better to stick with your monolith? In this blog post, we’ll discuss pitfalls that should be avoided at all costs when breaking up a monolith. We’ll also talk about essential considerations that can help you decide whether a migration is the right choice for your specific case. Ultimately, the key is to make an informed decision that aligns with your project’s goals and needs, rather than blindly following industry trends. Let’s start with the first mistake you can’t afford to make!

Not considering microservice drawbacks

When it comes down to it, transitioning to microservices can be a lot more cumbersome than sticking with the familiar monolith. For example, in a monolith, method invocations are simple and happen within the same process, but with microservices, everything becomes network-dependent. This network communication can lead to performance issues, especially if the services are not well-designed or the network itself is unreliable.

Network calls introduce new complexities, such as handling potential failures. If a network call fails, you may need a retry mechanism so that transient errors don’t immediately surface as failed operations. Additionally, with distributed services you must consider scenarios where other services are down or experiencing issues. This calls for strategies like load balancers, which spread requests across healthy instances, and circuit breakers, which stop calling a service that keeps failing.
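To make retries and circuit breakers more concrete, here is a minimal Python sketch. It is not the API of any particular library: the names `call_with_retry` and `CircuitBreaker` and the default thresholds are illustrative assumptions. Retries with exponential backoff absorb short network blips. The breaker stops hammering a service that keeps failing and fails fast instead.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without contacting the remote service."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures; once `reset_timeout`
    seconds have passed it lets a trial call through (half-open).
    Single-threaded sketch: not safe for concurrent use."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open, failing fast")
            self.opened_at = None  # half-open: allow a trial call
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def call_with_retry(fn, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call `fn`, retrying on ConnectionError with exponential backoff
    (0.1 s, 0.2 s, 0.4 s, ...). Other errors, including CircuitOpenError,
    are not retried."""
    for attempt in range(attempts):
        try:
            return fn()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

You can combine the two as `call_with_retry(lambda: breaker.call(fetch_user, 42))`, where `fetch_user` is a hypothetical client function. This retries transient failures but stops as soon as the breaker opens. Only retry idempotent operations, because a request that timed out may still have succeeded on the other side. In practice you would usually rely on an established resilience library or a service mesh rather than hand-rolling this.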

Another significant challenge arises when dealing with errors. In a monolith, tracking down issues is relatively straightforward: you have a single deployable and a single log to inspect. With microservices, things get complicated quickly. You may find yourself sifting through five different logs at once, especially if you haven’t yet integrated log aggregation tools like Fluentd or ELK (Elasticsearch, Logstash, Kibana). Without centralized logging, troubleshooting errors becomes a real headache. Monitoring and alerting are also less straightforward. With multiple services running independently, you need monitoring that covers every service, and identifying bottlenecks or performance issues means correlating data across services rather than looking at a single process.
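Log aggregation only pays off if you can find every entry that belongs to one request. A common technique is a correlation ID. It is generated at the edge, passed along with every downstream call, and written into every log line. The sketch below uses Python’s standard `logging` and `contextvars` modules. The header name `X-Correlation-ID` is a widespread convention, not a standard; W3C Trace Context defines `traceparent` for the same purpose. `handle_request` is a hypothetical handler.

```python
import contextvars
import logging
import uuid

# Correlation ID of the request currently being handled (per thread / async task).
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copies the current correlation ID onto every log record."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


def configure_logging(service_name, stream=None):
    """Log lines include the service name and correlation ID, so an aggregator
    can group entries from all services that belong to one request."""
    handler = logging.StreamHandler(stream)  # stream=None means stderr
    handler.addFilter(CorrelationIdFilter())
    handler.setFormatter(logging.Formatter(
        f"%(asctime)s {service_name} [%(correlation_id)s] %(levelname)s %(message)s"))
    logger = logging.getLogger(service_name)
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


def handle_request(logger, headers):
    """Hypothetical request handler: reuse the caller's ID or start a new one,
    and return the headers to forward to downstream services."""
    cid = headers.get("X-Correlation-ID") or str(uuid.uuid4())
    token = correlation_id.set(cid)
    try:
        logger.info("processing order")
        return {"X-Correlation-ID": cid}
    finally:
        correlation_id.reset(token)
```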

Even the once-trivial CI/CD (Continuous Integration and Continuous Delivery) pipeline becomes more intricate. Because each microservice needs to be built, tested, and deployed independently, managing the pipelines demands extra effort and coordination.

Integration tests, once routine, change fundamentally as well. They now involve network calls, and auxiliary services have to be started and orchestrated before the tests can run.
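One way to keep such tests fast and deterministic is to replace a real downstream service with a small in-process stub. The sketch below uses only Python’s standard library to start a fake “inventory service” on a free local port. The test is written pytest-style. The service, its `/stock/<sku>` endpoint, and the response shape are hypothetical.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeInventoryHandler(BaseHTTPRequestHandler):
    """Stub for a hypothetical inventory service: every SKU has 5 items in stock."""

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        body = json.dumps({"sku": sku, "in_stock": 5}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def start_fake_inventory():
    """Start the stub on a free port (port 0) in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), FakeInventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def stock_level(base_url, sku):
    """Code under test: fetches the stock level over HTTP."""
    with urllib.request.urlopen(f"{base_url}/stock/{sku}", timeout=2) as resp:
        return json.load(resp)["in_stock"]


def test_stock_level_against_stub():
    server = start_fake_inventory()
    try:
        host, port = server.server_address
        assert stock_level(f"http://{host}:{port}", "ABC-1") == 5
    finally:
        server.shutdown()
        server.server_close()
```

A stub like this keeps the network hop in the test but removes the need to deploy the real dependency. You still need some broader end-to-end tests against real services.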

Cost is another significant consideration. While microservices offer numerous benefits, they come at a price. The infrastructure required to support a microservices architecture can be considerably more expensive than running a simple monolith. Each service needs its own resources and infrastructure, which quickly add up, especially as the system scales.

Data consistency and distributed transactions are manageable, but they bring their own set of complexities that require careful design.

Certainly, these challenges are not insurmountable, and there are solutions to address them. However, it’s crucial to be aware that many aspects of development, testing, deployment, and monitoring are not as straightforward as they were with a monolith. The increased overhead demands careful planning and a keen understanding of the microservices architecture.

As we touched on earlier, microservices do offer a multitude of benefits, but those advantages come with the caveat that they are most valuable when you truly need them. Otherwise, you might find yourself burdened with the disadvantages without reaping the rewards. Before making the leap, consider your project’s specific needs and carefully evaluate whether the benefits of microservices outweigh the increased complexities they introduce.

And that brings me to the next pitfall when trying to break up a monolithic system: having the wrong reasons or, in other words, not having a good reason.

Not having a good reason

Are microservices better than a monolith? This seemingly straightforward question becomes complex upon closer inspection. Looking at the bigger picture, a more fundamental question emerges: What reasons support each architectural path?

On the one hand, a monolith has clear advantages in terms of technical simplicity: a single deployable unit streamlines development, testing, and refactoring. On the other hand, microservices offer benefits like tailored scalability, improved fault tolerance, and a decoupled, technology-flexible setup. But this path brings challenges. Comprehensive system testing becomes harder because there are more components, and refactoring, especially when carving out new services, requires careful handling.

However, a purely technical comparison of pros and cons is not enough. The fundamental question is whether the merits of microservices genuinely address my project’s needs and justify the added complexity. Any architectural choice must rest on solid reasoning, and understanding why a path is chosen matters as much as the choice itself. So ask: what genuine problem would microservices solve? If there is no compelling answer, reconsider whether you need them at all. When in doubt, a monolith is a reasonable default that maintains the status quo.

Furthermore, it’s worth exploring alternatives. Is my aim solely to achieve greater modularity and better module cohesion? Could a modular monolith serve the same purpose? At the start of a project in particular, when the domain is not yet well understood, starting with a monolith can be judicious. For instance, you can structure it into Maven modules or build it with the Spring Modulith approach.

So what constitutes a sound reason for embracing microservices? According to Sam Newman, the top three are:

- Zero-downtime independent deployability: For instance, in a software-as-a-service business model, where continuous deployment of new features is vital, microservices offer the ability to deploy independently and roll back seamlessly in case of glitches.

- Isolation of data and processing: Sensitive data can be kept inside a single microservice and never leave it. Industries like healthcare, which are subject to stringent data regulations, can benefit from such isolation.

- Enhanced organizational autonomy: Microservices can be aligned with organizational structures, enabling individual teams to manage specific microservices. This autonomy reduces coordination overhead and promotes more streamlined workflows.

This brings us to a pertinent mistake — failing to consider the organizational structure when making architectural decisions.

Not considering the organizational structure

As previously highlighted, microservices can be strategically aligned with an organization’s structure, allocating specific teams to manage distinct microservices. This configuration offers a pronounced advantage — teams can operate with greater autonomy, and the need for extensive coordination with other organizational components is curtailed.

Consider a small team of only five people (a product owner, a business analyst, two developers, and a tester) responsible for an entire software product. In that case, the viability of microservices is questionable. From personal experience, I contend that microservices in such a scenario may be counterproductive, introducing unwarranted overhead that impedes efficiency. The developer experience suffers as well, since even minor bug fixes can require changes across multiple repositories.

As teams grow, coordinating deployments becomes much harder. The boundaries that microservices introduce then become a necessary countermeasure. In larger enterprises such as banks, for example, a microservices architecture finds its footing. It distributes responsibilities among teams, increasing their autonomy and letting them act independently. In the ideal scenario, these teams develop and deliver software on their own, without the need for constant cross-team coordination.

Conway’s Law states that system designs mirror an organization’s communication structures. Put simply, small distributed teams tend to build a web of small, distributed systems, while larger teams tend to produce monolithic ones. My own experience corroborates this: attempts to work against this principle often meet resistance, because they run contrary to how the organization naturally communicates. I’ve personally encountered cases where poor communication channels and the involvement of multiple teams in a single feature resulted in incomplete or bug-ridden outcomes.

In essence, the lesson is that organizational structure is a key factor when considering microservices. Architecture and organization should be aligned.

Not avoiding a distributed monolith

Creating a distributed monolith instead of well-defined microservices is problematic and counterproductive. Although you have split your monolithic application into different services, they remain tightly coupled, so the benefits of microservices are not realized. This situation leads to several challenges and can negate the advantages you intended to gain through microservices.

The problems associated with a distributed monolith are as follows:

- Increased complexity and minimal benefits: If services are tightly coupled and interdependent, the overhead of managing multiple services remains, but the advantages of individual scalability and fault tolerance are not achieved. This means you are burdened with the complexities of microservices without reaping their full benefits.

- Tight coupling and deployment challenges: When services are tightly coupled, making changes to one service can often necessitate changes in other services as well. This coupling reduces the independence of services, and deploying them individually becomes difficult, as updates in one service may require redeploying multiple services.

- Inability to scale independently: In a distributed monolith, services may not scale independently, as increased traffic in one service can cascade to other services, causing potential performance bottlenecks across the system.

- Impact on fault tolerance: Network calls between services introduce new points of failure. If a service becomes unavailable, it may affect other dependent services, leading to a degradation in the overall system’s fault tolerance.

- Challenges for development teams: In a distributed monolith, teams may struggle to work independently, because service boundaries are not clearly defined. This leads to coordination challenges and hinders the teams’ autonomy.

In contrast, well-defined microservices with clear service boundaries enable teams to work independently, scale services individually, and improve fault tolerance. The modularity and autonomy offered by proper microservices architecture are the key factors that make them an attractive solution for organizations seeking to benefit from this architectural style.

A distributed monolith has all the disadvantages of a distributed system, and the disadvantages of a single-process monolith, without having enough of the upsides of either. — Sam Newman

Preventing the emergence of a distributed monolith in your microservices architecture involves careful planning and adherence to key software design principles. Just like in object-oriented programming and modularity, the concepts of information hiding, high cohesion, and low coupling are crucial in designing effective microservices.

Another effective way to prevent this scenario is domain-driven design: defining service boundaries up front so that the architecture follows the inherent structure of your domain.

In the following sections, we’ll explore the concept of entity-based microservices in greater depth, shedding light on its implications and strategies to navigate around its potential pitfalls.

Not considering your data when splitting the code

A common misstep is introducing microservices without a thorough assessment of how they interact with data. When multiple microservices share a common database, they can inadvertently recreate a distributed monolith, coupled through the database instead of through the code.

This prompts an essential question: why should we be concerned? An apt comparison comes from Star Wars. The Death Star was destroyed because a single weak point, a small exhaust port, led straight to the reactor at its core. A shared database is a similar single point that couples every service connected to it.

Sharing a database between microservices carries real risks. Any change to the schema ripples through every microservice that uses it and requires coordinated adjustments and deployments. Database migrations, already complex on their own, add another layer of difficulty.

Splitting data out of a relational database is far from straightforward, because the essence of a relational database lies in the relationships between its tables. Untangling the data therefore breaks relationships and can erode the inherent advantages of a Relational Database Management System (RDBMS). This gives rise to a variety of challenges:

- Performance: Joins and relationships that the database used to resolve now have to be handled in application code, often across network calls. Caching can mitigate this, but increased latency remains a concern.

- Data Integrity: Foreign keys can no longer be enforced across separate databases, so deleting a record in one service can leave dangling references in another. Techniques like soft deletes or referencing names instead of IDs can mitigate this.

- Transactions: The intricacies of distributed transactions come to the forefront. Keeping updates across separate databases atomic requires a protocol such as two-phase commit, which holds locks across services, blocks if the coordinator fails, and is hard to get right. Without such a protocol, atomicity is lost. Deadlocks and partial updates become pressing concerns.

Hence, distributed transactions should be approached cautiously, if at all. Alternative strategies warrant exploration. One is to restructure the microservices so that data that must change together lives in one service. Another is to implement long-lived transactions (LLTs) with the saga pattern.
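A saga replaces one distributed transaction with a sequence of local transactions. Each is paired with a compensating action that semantically undoes it. The following is a minimal, in-memory sketch of an orchestrated saga. The steps (`reserve_stock`, `charge_card`) are hypothetical stand-ins for calls to separate services. A real implementation would also persist the saga’s state and make each step idempotent, so it can recover after a crash.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    name: str
    action: Callable[[dict], None]      # local transaction in one service
    compensate: Callable[[dict], None]  # semantic undo of that transaction


def run_saga(steps: List[SagaStep], ctx: dict) -> bool:
    """Run steps in order. If one fails, run the compensations of the steps
    that already completed, in reverse order, and report failure."""
    log = ctx.setdefault("log", [])
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action(ctx)
        except Exception as exc:
            log.append(f"{step.name} failed: {exc}")
            for done in reversed(completed):
                done.compensate(ctx)
                log.append(f"compensated {done.name}")
            return False
        completed.append(step)
        log.append(f"{step.name} ok")
    return True


# Hypothetical steps standing in for calls to an inventory and a payment service.
def reserve_stock(ctx):
    ctx["reserved"] = True


def release_stock(ctx):
    ctx["reserved"] = False


def charge_card(ctx):
    if ctx["amount"] > 100:
        raise RuntimeError("card declined")
    ctx["charged"] = True


def refund_card(ctx):
    ctx["charged"] = False


ORDER_SAGA = [
    SagaStep("reserve_stock", reserve_stock, release_stock),
    SagaStep("charge_card", charge_card, refund_card),
]
```

Running `run_saga(ORDER_SAGA, {"amount": 500})` fails at the payment step and releases the reserved stock. The end state is consistent, even though there was never a moment where both services were updated atomically.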

Decomposition without a clear vision

While overlooking data implications is a pitfall, the broader scope demands a strategic outlook on the decomposition process itself. Arbitrarily fragmenting components into disparate services seldom proves advantageous. Instead, a purposeful and methodical approach is essential.

The first question is one of sequence: should the code or the data be split first? Each route has its pros and cons. Splitting the data first is prudent when you are unsure how the data is shared, because the split can be made, and its consequences observed, while the code still remains in the monolith.

A sensible strategy is to start with the part of the monolith that delivers the most value once extracted and is also relatively easy to extract.

Decomposition strategies:

- Begin with simplicity: You may prefer to start with well-encapsulated, uncomplicated parts of the monolith.

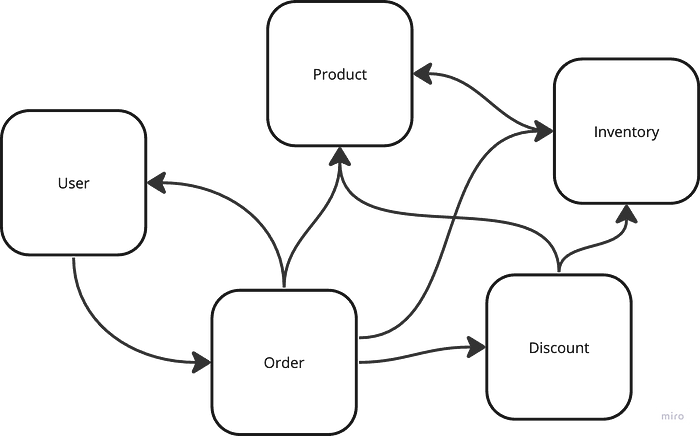

- Leverage dependency graphs: Visualize functionalities or sections of the monolith as nodes in a graph. Prioritize the node that the most other nodes depend on, that is, the service on which others critically depend.

- Service-centric approach: Progress incrementally, building service upon service, ensuring dependencies are handled thoughtfully.
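For the dependency-graph strategy, it is straightforward to count how many parts of the monolith depend on each node once you have the graph. The sketch below assumes the graph has already been extracted into a dictionary, by hand or with a static-analysis tool. The module names are hypothetical. The count is only one input: value and ease of extraction still need judgment.

```python
from collections import Counter

# Hypothetical monolith modules, each mapped to the modules it calls.
DEPENDS_ON = {
    "checkout": ["catalog", "pricing", "customers"],
    "invoicing": ["customers", "pricing"],
    "recommendations": ["catalog", "customers"],
    "pricing": ["catalog"],
    "catalog": [],
    "customers": [],
}


def rank_by_dependents(graph):
    """Return (module, number_of_dependents) pairs, most depended-on first."""
    counts = Counter({module: 0 for module in graph})
    for module, deps in graph.items():
        for dep in set(deps):  # count each dependent module once
            counts[dep] += 1
    return counts.most_common()
```

For this example graph, `catalog` and `customers` each have three dependents and `pricing` has two, so those would be the first candidates to look at.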

The path to effective decomposition pivots on methodical evaluation, prudent decision-making, and a meticulous execution plan. A well-orchestrated decomposition process paves the way for a coherent, streamlined microservices architecture.

No incremental migration

This brings us to a closely related pitfall: failing to migrate incrementally. This misstep means carving up the monolith and launching many microservices all at once. Sam Newman offers an apt analogy: just as you wouldn’t turn the volume all the way up before putting on your headphones, you should increase the number of services gradually. The reason is simple. Most problems only surface in production, especially on your first venture into microservices. More services means more pain.

“If you do a big-bang rewrite, the only thing you’re guaranteed of is a big bang. — Martin Fowler”

A complete overhaul might work for simpler systems. In general, though, the migration should bring tangible benefits to customers along the way, and the reason for migrating should ultimately be a better experience for users. A comprehensive migration can also take months or even years, comparable to the original development, and new features still need to be delivered throughout.

Migrating incrementally rather than all at once shields your system from sudden upheaval. It makes the path toward a microservices architecture safer and easier to navigate.

One potent approach is the “Strangler Fig Pattern.” This pattern draws inspiration from the Florida Strangler Fig, a species of ficus known for its unique growth. The fig plants germinate on a tree branch, growing down and encasing the host tree over time.

Applied to migration, the method works like this. New services grow around the monolith, just as the fig encases its host. A proxy placed in front of the monolith redirects traffic, so the shift can happen gradually. Step by step, functionality is moved out of the monolith into new services while the monolith keeps running. A feature toggle lets you choose whether a call goes to the monolith or to the extracted service. As the new microservices mature, they take over more and more features, until the new architecture carries the load while still coexisting with the legacy system. Once everything has been migrated, the monolith can be retired.
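The routing part of the Strangler Fig Pattern can be sketched as a small router that sits in front of the monolith. In a real system, a reverse proxy or API gateway forwarding HTTP requests usually plays this role. Here, plain Python functions stand in for the monolith and the extracted service, and all names are hypothetical.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]  # takes a request path, returns a response


class StranglerRouter:
    """Decides, per route prefix, whether a request goes to the legacy
    monolith or to an extracted service, based on a feature toggle."""

    def __init__(self, monolith: Handler):
        self.monolith = monolith
        self.extracted: Dict[str, Handler] = {}
        self.toggles: Dict[str, bool] = {}

    def register(self, prefix: str, service: Handler, enabled: bool = False) -> None:
        # New services are registered switched off, so deploying them changes nothing.
        self.extracted[prefix] = service
        self.toggles[prefix] = enabled

    def set_toggle(self, prefix: str, enabled: bool) -> None:
        self.toggles[prefix] = enabled

    def handle(self, path: str) -> str:
        for prefix, service in self.extracted.items():
            if path.startswith(prefix) and self.toggles.get(prefix, False):
                return service(path)
        return self.monolith(path)  # everything not (yet) migrated
```

Switching a toggle back to `False` sends traffic to the monolith again. This gives you a cheap rollback while the new service is still being proven in production.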

Not considering customer journeys

Creating entity-based services, for example a user service, an order service, and a product service that each wrap a single entity, often leads to a distributed monolith. This situation, known as the entity trap, brings the familiar disadvantages:

- Tight coupling between services, necessitating concurrent changes across multiple services.

- Challenges in independent deployment, demanding redeployment of multiple services for any alterations.

- Limited individual scalability, as increases in traffic on one service impact interconnected services.

- Compromised fault tolerance, exacerbated by network dependencies.

A promising solution is to avoid entity-driven divisions and split services along customer journeys instead. This means designing services around genuine user scenarios, so that a single scenario can largely be handled by one service.

However, services still need data owned by other services. The order service, for example, needs user data. One solution is for each service to store its own selective, read-only copy of the data it needs. This fits naturally with an event-driven model. The owning service broadcasts changes as events, and other services update their replicas from those events without depending on the owner directly.
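Here is a minimal sketch of this idea, using an in-memory event bus in place of a real message broker. The user service publishes `UserUpdated` events, and the order service keeps a read-only local copy of just the fields it needs. The event type and its fields are assumptions for illustration, as is the `version` number used to discard stale or duplicate events.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class UserUpdated:
    """Event published by the (hypothetical) user service after each change."""
    user_id: int
    name: str
    shipping_address: str
    version: int  # increases with every change to the user


class EventBus:
    """In-memory stand-in for a message broker such as Kafka or RabbitMQ."""

    def __init__(self):
        self.subscribers: Dict[type, List[Callable]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self.subscribers[event_type].append(handler)

    def publish(self, event) -> None:
        for handler in self.subscribers[type(event)]:
            handler(event)


@dataclass
class UserSnapshot:
    name: str
    shipping_address: str
    version: int


class OrderService:
    """Keeps a read-only replica of only the user fields it needs."""

    def __init__(self, bus: EventBus):
        self.users: Dict[int, UserSnapshot] = {}
        bus.subscribe(UserUpdated, self.on_user_updated)

    def on_user_updated(self, event: UserUpdated) -> None:
        current = self.users.get(event.user_id)
        # Ignore duplicates and out-of-order (stale) events.
        if current is None or event.version > current.version:
            self.users[event.user_id] = UserSnapshot(
                event.name, event.shipping_address, event.version)

    def shipping_label(self, user_id: int) -> str:
        user = self.users[user_id]  # local read: no call to the user service
        return f"{user.name}, {user.shipping_address}"
```

Between the user service committing a change and the event arriving, the order service still sees the old address. This is the eventual consistency trade-off that comes with this approach.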

This approach brings significant benefits. Services become more scalable and resilient, because they no longer depend on other services being available at request time. It does come with trade-offs, though: eventual consistency and data duplication add complexity. Nevertheless, practical experience shows that the advantages justify the trade-off, leading to better decoupling and a more efficient system.

Not considering other architectural styles

Among the critical mistakes, we now come to perhaps the most significant one: neglecting alternative architectural styles.

An old saying is instructive here: “Nobody gets fired for buying IBM”. It originated in the 1970s and 1980s and captured how managers tended to choose well-established, safe brands like IBM, then a leader in the computing industry. The underlying idea was simple: familiarity and reliability often outweighed the temptation to explore unknown or risky options.

A parallel can be drawn with microservices, which have become a contemporary “IBM” of sorts: the safe, fashionable choice. Yet the key consideration isn’t whether to adopt microservices, but which architecture best aligns with your specific business requirements. This brings us back to questions raised earlier: What is the context of my business? Which architectural characteristics best serve my needs?

In “Fundamentals of Software Architecture”, Mark Richards and Neal Ford classify these architectural styles, from the conventional layered architecture to less conventional ones like space-based architecture. I won’t go into the details of these styles here, and I don’t claim in-depth knowledge of all of them. My main point is that the decision doesn’t boil down to choosing between monoliths and microservices. The pivotal question is which architecture best fits the specific use case.

The impact of the wrong architectural choice should not be underestimated: it drags down the efficiency and productivity of individuals and organizations alike. Architectural suitability also changes over time; what fits now might not stand the test of time.

While we explore these aspects, it’s important to dispel a common misconception: the monolith is rarely the enemy. By broadening our perspective and considering a range of architectural styles, we empower ourselves to craft a resilient, future-proof foundation for our digital endeavors.

It’s time to build

In conclusion, as we’ve explored the potential pitfalls of transitioning from monoliths to microservices, it’s crucial to emphasize that there’s no one-size-fits-all answer. At &, we recognize the strengths of both monoliths and microservices. We understand that project requirements and preferences vary greatly. Our approach is to choose the architectural style that best fits the project, aligns with the client’s goals, and leverages our team’s expertise. This ensures the development of robust and scalable applications tailored to each project’s needs. In the dynamic field of software development, thoughtful architectural decisions are key to success. Rather than favoring one approach over the other, we carefully evaluate each project’s context and objectives. By avoiding common pitfalls and adopting a pragmatic approach, your projects can thrive with monoliths or microservices. Remember, success hinges on making well-informed architectural choices that align with your project’s unique demands.

Let me know your opinion, and feel free to reach out if you have any questions.